With improvements in sensor performance, a modern CCTV security camera genuinely has the potential to replace a human observer at the scene.

The new generation of security cameras has Wide Dynamic Range (WDR) capabilities which deliver dramatic improvements in visual quality, but understanding their performance is about much more than how many dBs they can handle. This article discusses what we mean by WDR and how, by combining advanced low-noise sensor technology with sophisticated image processing, the full range of real-world environments can be successfully captured and displayed.

By Dr. Michael Tusch

Scene captured using typical image processing pipeline (Photo by Apical Limited)

Same scene captured with pixel-by-pixel dynamic range correction (Photo by Apical Limited)

The latest security cameras take full advantage of recent advances in digital imaging technology, and increasingly incorporate intelligent algorithms based on motion and object detection to automate the surveillance process. However, we may still ask the following question:

Why is it that even the best CCTV cameras often produce rather washed-out and low contrast images under non-ideal viewing conditions?

A related question concerns the performance of Wide Dynamic Range (WDR) functions, which are invoked to allow the camera to handle conditions of extreme illumination:

In judging WDR performance, is a higher "WDR value" in dB necessarily better?

The answers lie in the difference between what is captured by the camera, and what can be displayed or processed. Understanding this distinction, and proposing how both parts can be optimized, is the goal of this article.

ESSENTIAL CAPABILITY

If the ultimate aim of a security camera is to replace a human observer at the scene, a WDR capability is essential. Real environments present a very wide range of illumination levels and the human visual system is highly effective at extracting information in all but the most extreme conditions. A camera must do the same, or better, if its performance is not to vary strongly depending on its location, the time of day, and the ambient lighting conditions.

While a combination of low noise CCD or CMOS sensors and an intelligent auto-gain algorithm is readily able to adjust camera sensitivity between day and night conditions, it is scenes in which very bright and dark areas co-exist that present the most serious challenge. These occur frequently in real situations: at dawn and dusk; in direct sun with deep shadows; under backlit conditions found frequently in entrance areas; at night under directed artificial illumination.

In such environments, a camera needs to perform the following functions:

- Capture as much of the original scene as possible

- Produce a natural-looking image

- Enhance detail in shadow and highlight areas

- Ensure that all relevant information is preserved on standard 8-bit output

Effective performance is the result of both the sensor technology and the signal processing which is applied to the raw captured image stream. Often, this performance is characterized by a simple measure of dynamic range measured in decibels (dB). However, as we will show in the rest of the article, this number is only part of the story and does not tell us how useful the WDR images will be for later analysis, whether human or automated.

The WDR capability is actually a combination of two distinct technologies: a sensor which can capture a wide range of intensities (luminance values) with good signal-to-noise ratio; and a dynamic range compression engine which can convert this raw data into a range suitable for display or transmission.

CAPTURE VS. DISPLAY

Of course, the sensor in a WDR camera is a key component, but probably not the most important one. This may be a surprising conclusion: surely, if a camera has a sensor which can capture a very wide range of intensities between very dark and very bright, with high signal-to-noise ratio, one can expect it to be a good WDR camera.

And indeed there exists a variety of such sensors, including low-noise CMOS and CCD sensors, custom pixel sampling technologies, and multiple-exposure systems, which achieve this performance. Such sensors are able to extend the captured dynamic range from the 60dB of a conventional CCD sensor up to almost 100dB, a range of intensities up to 100 times wider.

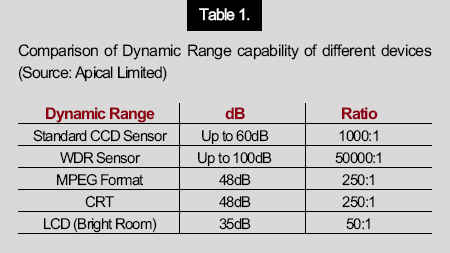

Consider the following, however. The range of intensities captured by such a sensor may be up to 100dB (100,000:1). A typical display is able to render around 48dB (roughly 250:1), and under non-ideal conditions (LCD panel, bright ambient conditions), around 35dB (about 50:1).

Clearly, therefore, in order to see the details captured by the camera, it is necessary to map this very wide range of raw data onto a much smaller range, without any loss of information. This processing must be done inside the camera, because standard 8-bit video (whether analogue or compressed digital such as H.264) can hold only 48dB of dynamic range. Any visual information outside this range when the video stream is compressed can never be recovered by any amount of subsequent post-processing.
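The decibel figures in this section can be checked with a few lines of arithmetic. The sketch below assumes the 20·log10 amplitude convention commonly used for sensor dynamic range; the function names are illustrative and not taken from any camera SDK.

```python
import math

def db_to_ratio(db):
    """Convert a dynamic range in dB to a contrast ratio (20*log10 convention, assumed)."""
    return 10 ** (db / 20)

def ratio_to_db(ratio):
    """Convert a contrast ratio such as 1000 (meaning 1000:1) to dB."""
    return 20 * math.log10(ratio)

def bits_to_db(bits):
    """Dynamic range spanned by a linear code with the given number of bits."""
    return ratio_to_db(2 ** bits)

# Ranges mentioned in the text: display, 8-bit video, conventional CCD, WDR sensor.
for db in (35, 48, 60, 100):
    print(f"{db} dB is roughly {db_to_ratio(db):,.0f}:1")

print(f"8-bit output spans about {bits_to_db(8):.1f} dB")
```

Under this convention, the 40dB gap between a 60dB sensor and a 100dB sensor corresponds to a factor of 100 in intensity range, and 8 bits correspond to roughly 48dB.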

Figure 1 summarizes the twin aspects of dynamic range which are important to any security camera installation.

Basically, the problem is that the real world has a much wider range of illumination levels than we can reasonably handle in transmission, storage and display of video imagery.

Interestingly, the human visual system has the same problem: transmission of image data along the optic nerve is quite slow compared to a typical LAN, and yet we still see far better than the best CCTV camera. And indeed, by reproducing some aspects of human visual processing, it is possible to solve this problem very effectively.

Figure 1. The twin stages of image capture and reproduction requiring WDR processing (Source: Apical Limited)

Figure 2. Example of a pixel-by-pixel WDR processing algorithm, iridix (Source: Apical Limited)

DYNAMIC RANGE COMPRESSION

The mapping of the very wide range of real-world intensities, as captured by a camera sensor, to a range suitable for transmission or display, is known as Dynamic Range Compression (DRC). An example is shown in Figure 2, where the compression of dynamic range reveals information which was previously below the darkest level that the display or print medium can render and was therefore clipped away.

Many WDR sensor systems combine image capture with DRC (for example, logarithmic sensors). Others use simple non-linear conversion such as gamma correction to effect this mapping. A typical WDR camera may effect a conversion from internal 60dB dynamic range to 48dB NTSC or H.264 output using gamma correction.
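As a rough illustration of such a global mapping, the sketch below applies one power-law curve to every pixel and compares it with a plain linear mapping. The exponent 2.2, the normalisation to [0, 1], and the function names are assumptions chosen for illustration; real cameras use their own tuned curves.

```python
def linear_encode(linear, out_max=255):
    """Map a linear intensity in [0, 1] straight onto an 8-bit code."""
    clipped = min(max(linear, 0.0), 1.0)
    return round(out_max * clipped)

def gamma_encode(linear, gamma=2.2, out_max=255):
    """Map a linear intensity in [0, 1] onto an 8-bit code with one global power-law curve."""
    clipped = min(max(linear, 0.0), 1.0)
    return round(out_max * clipped ** (1.0 / gamma))

# A level 60 dB below full scale is 1/1000 of the maximum intensity.
darkest = 0.001
print("linear code:", linear_encode(darkest))
print("gamma code: ", gamma_encode(darkest))
```

With the linear mapping the darkest level rounds to code 0 and is lost, whereas the gamma curve keeps it above zero. The same curve is applied to every pixel regardless of its surroundings.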

Using a DRC algorithm as above, it is quite correct to say that 60dB of WDR data is output by the camera. However, we may ask: what is the quality of the video picture?

At this point, we must look beyond simple numerical measures of WDR capability. We must consider global and local contrast, color fidelity, preservation of fine details and effective resolution.

Put simply, conventional DRC processing degrades global and local contrast, washes out color and reduces apparent sharpness through loss of contrast in fine details. The result is an image which has lost information in bright and dark regions, has unnaturally grey colors, and appears to be less sharp than the original raw image.
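One way to see the loss of local contrast is to note that a pure power-law curve shrinks every intensity ratio by the same exponent, whether or not that part of the scene needed compressing. The sketch below assumes a simple gamma of 2.2 and a hypothetical pair of highlight values; both are illustrative, not measurements from any camera.

```python
def gamma_curve(x, gamma=2.2):
    """Global power-law curve applied to a normalised intensity in (0, 1]."""
    return x ** (1.0 / gamma)

def contrast_ratio(a, b):
    """Ratio of the brighter value to the darker one."""
    return max(a, b) / min(a, b)

# Hypothetical fine detail in a bright region: two levels about 10% apart.
detail_dark, detail_bright = 0.80, 0.88

before = contrast_ratio(detail_dark, detail_bright)
after = contrast_ratio(gamma_curve(detail_dark), gamma_curve(detail_bright))
print(f"contrast before: {before:.3f}, after: {after:.3f}")
```

Because the output ratio equals the input ratio raised to the power 1/gamma, the detail's contrast is reduced everywhere in the frame, which is consistent with the flattened appearance described above.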

So, although the full range of sensor data is preserved, the quality of that data is lost. And the above qualities are important for CCTV applications: for example, the ability to process strong backlit conditions, capture of fine facial or alphanumeric details, realistic color reproduction. However, going beyond such technology is a challenging task, and in fact the development of advanced DRC algorithms is a hot research topic, both in universities and industry.

It is demanding because it requires a combination of the following key characteristics:

- Strong dynamic range compression

- Natural appearance

- No generation of false contours, edges or other artefacts

- Preservation of color and detail

- Efficient DSP or IC implementation

One such algorithm is Apical's iridix, which mimics the behaviour of the human visual system; its effect is shown in Figure 3. It works in a completely different way to normal gamma-based correction, which applies a single correction curve to a video frame, based on a measurement of how much of the image is too bright and how much too dark.

iridix instead calculates a different correction curve for every pixel in every frame of the video stream. This means that parts of the image which are very dark, and would normally be invisible on conventional output, can be strongly enhanced and brought within display range, while other over-saturated regions can be reduced in brightness and hidden detail revealed. And it can do this without generating strange visual effects like haloes and false edges, which could otherwise disturb analysis and render security footage unreliable.

In summary, iridix adapts automatically and instantly to changing conditions, with the result that all parts of the image are always in balance.
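The internals of iridix are not described in this article, so the following is only a generic sketch of the per-pixel idea: a spatially varying gamma whose exponent depends on the average brightness around each pixel. The box blur, the exponent formula and all names are assumptions made for illustration. A naive neighbourhood average like this one tends to produce haloes near strong edges, which is exactly the kind of artefact a production algorithm has to avoid.

```python
def box_blur(img, radius):
    """Average each pixel with its neighbours inside a square window."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out

def local_tone_map(img, radius=2):
    """Apply a different power-law curve to every pixel (values in [0, 1]).

    Dark neighbourhoods get an exponent below 1 (brightening);
    bright neighbourhoods get an exponent above 1 (darkening).
    """
    local_mean = box_blur(img, radius)
    out = []
    for row, mean_row in zip(img, local_mean):
        out.append([
            pixel ** (2.0 ** (2.0 * mean - 1.0))
            for pixel, mean in zip(row, mean_row)
        ])
    return out

# Toy backlit scene: dark left half, bright right half.
scene = [[0.05] * 4 + [0.9] * 4 for _ in range(4)]
result = local_tone_map(scene, radius=1)
print([round(v, 2) for v in result[0]])
```

In this toy scene the shadow pixels are lifted while the bright pixels are pulled down slightly, which a single global curve cannot do at the same time.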

Into this DRC engine, modules are added which preserve color and fine detail. Motion-based temporal noise reduction and spatially-varying frame-based noise reduction are also optionally implemented to improve source signal-to-noise ratio and extend effective source dynamic range.
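Motion-based temporal noise reduction can be sketched as a recursive filter that averages each pixel over time only where the scene is static. The version below is a minimal illustration under assumed parameters (`motion_threshold`, `blend`); it is not the module used in any particular camera.

```python
def temporal_denoise(prev_filtered, current, motion_threshold=0.1, blend=0.75):
    """Blend the current frame with the previous filtered frame, pixel by pixel.

    Where the change exceeds motion_threshold the pixel is treated as moving
    and the current value is kept, which avoids ghosting trails.
    """
    out = []
    for prev_row, cur_row in zip(prev_filtered, current):
        row = []
        for prev, cur in zip(prev_row, cur_row):
            if abs(cur - prev) > motion_threshold:
                row.append(cur)  # motion detected: trust the new sample
            else:
                row.append(blend * prev + (1.0 - blend) * cur)  # static: average out noise
        out.append(row)
    return out

previous = [[0.50, 0.50]]
incoming = [[0.52, 0.90]]  # first pixel: small noise, second pixel: real change
print(temporal_denoise(previous, incoming))
```

Averaging static regions over several frames lowers the noise floor, which is how such a filter can extend the effective dynamic range of the source in the shadows.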

The result is a very different appearance from current WDR camera systems. For example, Figure 4 shows how the original image captured by the WDR sensor (left) can be enhanced to reveal the subject in shadow, without affecting in any way the bright background region which is already well balanced. The resultant image is much closer to what a human observer would perceive.

The effect of fine detail preservation is also crucial. Compare for example the images in Figure 5, which are details cropped from a larger image. Both are obtained from the same WDR source image: the first has conventional correction, the second uses iridix correction. It is clear that the person is easier to identify from the second frame than the first.

To achieve these results, the engine has to handle a great deal of data very quickly. Unlike the 8-bit data typical of standard video applications, up to 16 bits per pixel are processed, a range more typical of professional movie post-processing, where servers might run for minutes or hours on a single frame.

Despite this complexity, it is possible to implement these algorithms on the current generation of DSPs in addition to custom ASSPs. In fact, it has become cost-effective to implement complete camera pipelines on programmable devices, combining powerful image processing with audio, networking and H.264 encoding, and intelligent algorithms like object tracking. The flexibility of software processing enables rapid implementation of the latest designs, and the ability to customize to specific sensor models and end-use applications. For example, a single camera may switch between two imaging pipelines: one, for optimal viewing on a standard display; another for input into an automated image analysis package running on a remote server.

With such in-camera processing, the full benefits of high quality optics and sensor technology can be translated into displayed video and used as a source for subsequent image-based applications.

With improvements in sensor performance, it is now possible to capture virtually the whole range of useful image information under even extreme illumination conditions. A modern CCTV security camera genuinely has the potential to replace a human observer at the scene.

However, simplistic processing of the raw data from these sensors leads to significant loss of image quality. We suggest that optimal camera designs should separate image capture (increasing the dB range of the sensor) from dynamic range compression (reducing this range to around 48dB or below). Efficient and powerful algorithms exist to handle this image processing, which can be implemented on DSP as well as FPGA and ASSP architectures.

In summary, when evaluating a WDR camera, don't look just at the dBs. Check out the picture quality visually, and ask: how close is this to what I see with my own eyes? Using the latest technology, it should be close indeed.

Figure 3. Example of a pixel-by-pixel WDR processing algorithm, iridix (Source: Apical Limited)

Figure 4. Still frame from a security camera (left) and the same camera incorporating iridix WDR processing (right) (Source: Apical Limited)

Figure 5. Effect of conventional WDR processing (left) and pixel-by-pixel processing (right) (Source: Apical Limited)

Dr. Michael Tusch is CEO of Apical Limited (www.apical-imaging.com).

For more information, please send your e-mails to swm@infothe.com.

©2007 www.SecurityWorldMag.com. All rights reserved.
